About NViz

What is NViz?

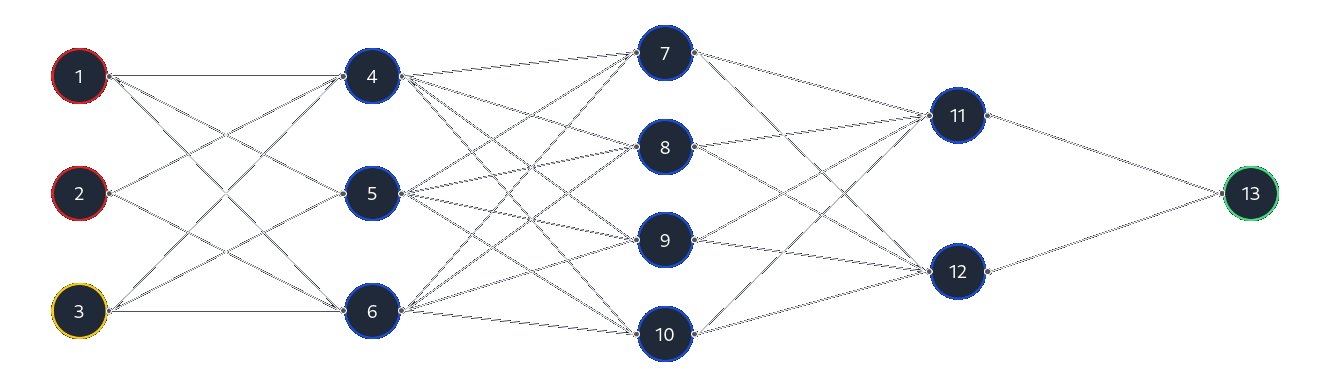

NViz is an online platform for visualizing both the structure and the training of multilayer perceptron (MLP) feed-forward neural networks.

What can I use it for?

You can use NViz to train, visualize, and experiment with a variety of feed-forward neural network architectures on your own datasets. Simply provide a dataset in the required format, adjust the settings as you see fit, and press "Train" to see your neural network in action! You can then provide an input file to test your model's predictions, or download the model's weights for your own use.

What is it made with?

NViz is built with Next.js and React, with Tailwind CSS for styling. It uses C++ compiled to WebAssembly to train your model directly within your browser at lightning-fast speeds!

Is it open source?

Absolutely! NViz is entirely open-source and available on GitHub. If you enjoy NViz and find it helpful, consider leaving a star on the repository! If not, please file an issue describing how it could be improved.

I'd like to try NViz, but I don't have a dataset...

NViz was created to help people better understand the structure and training of neural networks on their own datasets, but if you just want to experiment with the site and explore its capabilities, that's perfectly fine! A number of sample datasets are available in the GitHub repository for you to use. If you would like to train on your own dataset, follow the format guide when structuring your data files.

What are its limitations?

As mentioned, NViz can visualize and train multilayer perceptron (MLP) feed-forward neural networks. While fairly general, these are less versatile than more complex architectures such as recurrent neural networks (RNNs), which are designed for sequential data. NViz also allows a maximum of 1000 nodes in any individual layer to ease the load on your browser. Additionally, all models trained on NViz use the sigmoid activation function at hidden layers. Because sigmoid outputs lie between 0 and 1, the outputs in your training data must be normalized to that range. Other activation functions, such as ReLU and tanh, may be better suited depending on the purpose of the network. In the future, NViz will support selecting different activation functions based on model purpose.
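Because sigmoid outputs lie between 0 and 1, targets recorded in other units need rescaling before training and un-scaling afterwards. The TypeScript sketch below assumes simple min-max scaling, which is one common choice. NViz's format guide may expect something different, and the helpers `minMaxScale` and `unscale` are hypothetical, not part of NViz.

```typescript
// Logistic sigmoid: mathematically maps any real input into the open interval (0, 1).
function sigmoid(x: number): number {
  return 1 / (1 + Math.exp(-x));
}

interface ScaledColumn {
  scaled: number[]; // values mapped into [0, 1]
  min: number;      // original minimum, kept so predictions can be mapped back
  max: number;      // original maximum
}

// Hypothetical helper: min-max scale one column of raw target values into [0, 1].
function minMaxScale(values: number[]): ScaledColumn {
  const min = Math.min(...values);
  const max = Math.max(...values);
  const range = max - min;
  // A constant column would divide by zero; map it to the midpoint instead.
  const scaled = values.map((v) => (range === 0 ? 0.5 : (v - min) / range));
  return { scaled, min, max };
}

// Hypothetical helper: map a sigmoid output in [0, 1] back to the original units.
function unscale(y: number, min: number, max: number): number {
  return min + y * (max - min);
}

// Example: raw targets in arbitrary units (illustrative values only).
const rawTargets = [120, 180, 150, 300];
const column = minMaxScale(rawTargets);
console.log(column.scaled); // smallest value -> 0, largest -> 1
console.log(unscale(column.scaled[2], column.min, column.max)); // approximately 150
```

Keep the `min` and `max` from your training data: the same bounds are needed to turn the network's predictions back into meaningful values.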

Technical Information

What is the extra yellow node in the input layer?

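The extra node in the input layer is called a bias node. Think of it as an extra, constant input that acts like a battery, supplying a steady signal to the network no matter what the other inputs are. Its outgoing weights are learned during training like any other weights, which lets each neuron it connects to shift its activation up or down independently of the real inputs.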
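A bias input is easiest to see in a forward pass. This TypeScript sketch assumes the bias node outputs the constant 1 (NViz's actual value and weight layout may differ) and uses a hypothetical `layerForward` helper. The bias is appended to the inputs, so its weight simply adds a learned offset to each neuron's weighted sum.

```typescript
// Logistic sigmoid activation, as used at NViz's hidden layers.
function sigmoid(x: number): number {
  return 1 / (1 + Math.exp(-x));
}

// Hypothetical forward pass through one fully connected layer.
// weights[j] holds neuron j's incoming weights; the LAST entry is the weight
// on the bias node, whose value is assumed here to be the constant 1.
function layerForward(inputs: number[], weights: number[][]): number[] {
  const withBias = [...inputs, 1]; // append the constant bias input
  return weights.map((w, j) => {
    if (w.length !== withBias.length) {
      throw new Error(`neuron ${j}: expected ${withBias.length} weights, got ${w.length}`);
    }
    // Weighted sum of the real inputs plus (bias weight * 1).
    let sum = 0;
    for (let i = 0; i < w.length; i++) {
      sum += w[i] * withBias[i];
    }
    return sigmoid(sum);
  });
}

// Two inputs, both zero: only the bias weights affect the outputs.
const zeros = [0, 0];
console.log(layerForward(zeros, [[0.8, -0.4, 0.0]])); // bias weight 0 -> [0.5]
console.log(layerForward(zeros, [[0.8, -0.4, 2.0]])); // bias weight 2 -> approximately [0.881]
```

With every real input at zero, the weighted sum reduces to the bias weight, so the bias alone decides whether the neuron's output starts closer to 0 or to 1.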

What is training speed?

JavaScript's event loop is often described as non-blocking, but a long-running synchronous task, such as an unbounded while-loop, can in fact block it, and that is essentially what pressing "Train" starts. To keep the page responsive, the loop yields for 4 milliseconds after each training batch, during which the event loop can process other tasks, such as handling a click on the "Stop training" button. The "training speed" setting is, effectively, the number of training pairs processed in each batch before the loop yields: the higher the setting, the more pairs per batch. Somewhat counterintuitively, however, a lower setting can sometimes train faster, depending on how the browser manages resources and load. Setting the training speed too high may bottleneck the loop, resulting in slower training or even an unresponsive UI. Adjust the training speed before and during training for best results.
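The yield-per-batch pattern can be sketched in TypeScript. This is a simplified illustration, not NViz's actual code: `trainStep` stands in for whatever per-pair update the C++/WebAssembly trainer performs, and the names `trainLoop`, `getTrainingSpeed`, and `shouldStop` are hypothetical. The batch size plays the role of the "training speed" setting.

```typescript
// Hypothetical shape of one training example.
interface TrainingPair {
  input: number[];
  target: number[];
}

// Resolve after `ms` milliseconds, handing control back to the event loop so
// queued work (rendering, click handlers) can run in the meantime.
function yieldToEventLoop(ms: number): Promise<void> {
  return new Promise((resolve) => setTimeout(resolve, ms));
}

// Sketch of a batched training loop. `getTrainingSpeed` is read before every
// batch, so the setting can change mid-training; `shouldStop` would typically
// read a flag set by a "Stop training" click handler. Returns pairs processed.
async function trainLoop(
  data: TrainingPair[],
  trainStep: (pair: TrainingPair) => void,
  getTrainingSpeed: () => number,
  shouldStop: () => boolean,
): Promise<number> {
  if (data.length === 0) return 0;
  let index = 0;
  let processed = 0;
  while (!shouldStop()) {
    const batchSize = Math.max(1, Math.floor(getTrainingSpeed()));
    // Synchronous batch: no other event-loop task can run inside this for-loop.
    for (let i = 0; i < batchSize; i++) {
      trainStep(data[index]);
      index = (index + 1) % data.length; // wrapping back to 0 completes an epoch
      processed++;
    }
    await yieldToEventLoop(4); // the 4 ms yield between batches
  }
  return processed;
}
```

A click handler can only run while the loop is awaiting the timer, so checking the stop flag inside the inner `for` loop would gain nothing; the check between batches is where the click becomes visible. This is also why a very large batch delays the response to "Stop training".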

I'm not getting the results I want...

Neural network accuracy is highly dependent on factors such as network architecture, training rate, and the quality and quantity of the training dataset. Use NViz as a platform to experiment with different architectures and settings! You might also refine your training dataset or reduce its size by removing redundant examples: a smaller dataset means fewer training pairs per epoch, so more epochs complete each second. If no amount of experimentation works, a simple MLP feed-forward network may not have the capacity to solve your problem, or an online platform may not be performant enough to train quickly on your dataset. Consider building your own model with popular libraries such as PyTorch or TensorFlow.
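The TypeScript sketch below shows one simple way to remove redundancy (dropping exact duplicate pairs) and the arithmetic behind why a smaller dataset completes more epochs per second. The helper names and the throughput figure are hypothetical; NViz's real throughput depends on your network, your browser, and the training speed setting.

```typescript
// Hypothetical shape of one training example.
interface TrainingPair {
  input: number[];
  target: number[];
}

// Remove exact duplicate pairs (same input and same target), keeping the
// first occurrence. Near-duplicates are NOT detected by this simple check.
function dedupePairs(pairs: TrainingPair[]): TrainingPair[] {
  const seen = new Set<string>();
  return pairs.filter((pair) => {
    const key = JSON.stringify([pair.input, pair.target]);
    if (seen.has(key)) return false;
    seen.add(key);
    return true;
  });
}

// At a fixed throughput (training pairs per second), one epoch is one pass
// over the dataset, so epochs per second scale inversely with dataset size.
function epochsPerSecond(pairsPerSecond: number, datasetSize: number): number {
  if (datasetSize <= 0) throw new Error("dataset must be non-empty");
  return pairsPerSecond / datasetSize;
}

// Illustrative numbers only: 20000 pairs/s over 10000 pairs vs. 5000 pairs.
console.log(epochsPerSecond(20000, 10000)); // 2
console.log(epochsPerSecond(20000, 5000));  // 4
```

Halving the dataset doubles the epochs per second at the same throughput, although fewer epochs are not a goal in themselves: removing informative examples can hurt accuracy.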